Read Data From File

We will read an example data file with two NIRS channels, recording an incremental ramp cycling assessment. A few example NIRS data files are included in the mNIRS package.

First, we load in the mNIRS package and any other required libraries. mNIRS can be installed with devtools::install_github("jemarnold/mNIRS").

Specify file path

-

file_path: We need to specify the file path to read from. e.g.r("C:\myfolder\mNIRS_file.xlsx").

We will read in the file_path to the included example data file.

file_path <- system.file("extdata/moxy_ramp_example.xlsx", package = "mNIRS")Now we can read the data file with the read_data() function. This function will take in raw NIRS data exported from a device, and return a dataframe with the data channels we specify. See ?read_data for more details.

Specify column names

We need to tell the function what column names to look for, to identify our data table in the file. The data table may not be at the very top of the file, so these column names can be anywhere in the file.

nirs_columns: At minimum, we need one column name defined for NIRS data.sample_column: Typically, we will also specify a column for the time or sample number of each observation.event_column: We can specify a column to indicate laps or specific events in the dataset.

These names should be in quotations and exactly match the column headers in the file (case- and special character-sensitive). Multiple columns can be accepted for nirs_columns. We can rename these columns when importing our data in the format:

nirs_columns = c(new_name1 = "file_column_name1",

new_name2 = "file_column_name2")Other read_data() options

sample_rate: The sample rate of the NIRS recording can be specified if known, as number of samples per second, in Hz. If left blank, the function will estimate the sample rate fromsample_column, assuming it contains time values.numeric_time: Ifsample_columnis in a date-time format (e.g.hh:mm:ss), we can convert this to numeric withnumeric_time = TRUE. This may read the date-time format as starting from a non-zero value. This can be manually corrected later with some basic data wrangling.keep_all: Once the data table in the file is identified, the default is to return only the columns names explicitly specified above. If we want to return all columns in the data table, we can setkeep_all = TRUE.verbose: Finally, the function may return warnings or messages, for example if there are duplicate values insample_columnwhich may indicate a recording issue. These informative messages can be useful for data validation, but can be silenced withverbose = TRUE. Fail-state errors will always be returned.

data_raw <- read_data(file_path,

nirs_columns = c(smo2_left = "SmO2 Live",

smo2_right = "SmO2 Live(2)"),

sample_column = c(time = "hh:mm:ss"),

event_column = c(lap = "Lap"),

sample_rate = 2, ## we know this file is recorded at 2 samples per second

numeric_time = TRUE, ## to convert the date-time string to numeric

keep_all = FALSE, ## to keep the returned dataframe clean

verbose = TRUE) ## show warnings & messages, but ignore them for now

#> Warning: `sample_column = time` has non-sequential or repeating values.

#> ℹ Consider investigating at "time = 211.99, 211.99, 211.99, 1184, and 1184".

## ignore the Warning about repeated samples here

data_raw

#> # A tibble: 2,203 × 4

#> time lap smo2_left smo2_right

#> <dbl> <dbl> <dbl> <dbl>

#> 1 0 1 54 68

#> 2 0.4 1 54 68

#> 3 0.96 1 54 68

#> 4 1.51 1 54 66

#> 5 2.06 1 54 66

#> 6 2.61 1 54 66

#> 7 3.16 1 54 66

#> 8 3.71 1 57 67

#> 9 4.26 1 57 67

#> 10 4.81 1 57 67

#> # ℹ 2,193 more rowsData wrangling

Note our time column does not start at zero, because we converted from hh:mm:ss. Let’s quickly fix that, then we’ll plot the data.

We can do any other data wrangling steps here, as we would for any data file. I prefer to use tidyverse for dataframe wrangling.

data_time_fixed <- data_raw |>

mutate(time = time - first(time))

## {mNIRS} data can be plotted with a built in call to `plot()`

plot(data_time_fixed)

Metadata stored in mNIRS.data dataframes

Dataframes read or processed by mNIRS functions will return class = mNIRS.data and contain metadata, which can be retrieved with attributes(data).

Instead of re-defining our column names or sample rate, we can call them from the metadata. Some mNIRS functions will automatically retrieve metadata if present.

nirs_columns <- attributes(data_raw)$nirs_columns

nirs_columns

#> [1] "smo2_left" "smo2_right"

sample_rate <- attributes(data_raw)$sample_rate

sample_rate

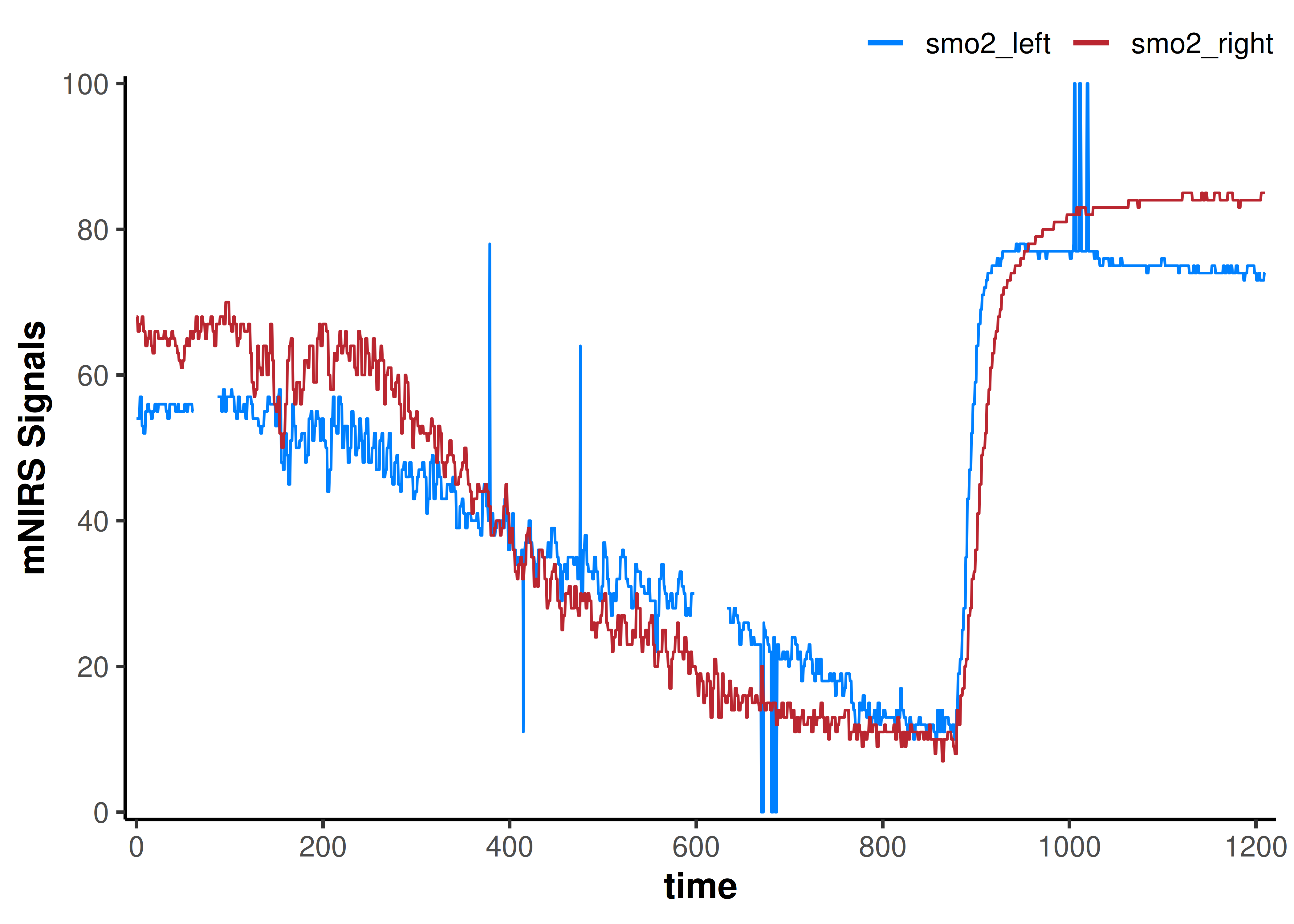

#> [1] 2Replace Outliers, invalid Values, and Missing Values

We can see some errors in the data signals, so let’s clean those up.

We can do some simple data wrangling steps to clean the invalid data and prepare it for digital filtering and smoothing.

-

x: These threereplace_*functions work on vector data; they take in a single data channel (x), apply processing to that channel, and return a vector of the processed channel data.

replace_outliers()

We can identify local outliers using a Hampel filter and replace them with the local median value. See ?replace_outliers for details.

width: The number of samples (window) in which to detect local outliers. To define the window in seconds, multiply the desired number of seconds by the sample rate.t0: The number of standard deviations outside of which are detected as outliers, defaulting to 3 (Pearson’s rule).na.rm: ATRUE/FALSEvalue indicating whether missing values (NA) should be ignored before the Hampel filter is applied.return: Indicates whether outliers should be replaced by the local “median” value or by “NA”.

Note that with relatively low sample rates, such as this 2 Hz file, outlier filters may occasionally over-filter and ‘flatten’ sections of the data. This is observed in this example near the end of the file, were the data trend is quite flat already.

replace_invalid()

Some NIRS devices or recording software can report specific invalid values, such as c(0, 100, 102.3). These can be manually removed with replace_invalid(). See ?replace_invalid for details.

values: A vector of numeric values to be replaced.width: The number of samples (window) in which the local median will be calculated. To define the window in seconds, multiply the desired number of seconds by the sample rate.return: Indicates whether invalid values should be replaced by the local “median” value or by “NA”.

replace_missing()

Finally, We can use replace_missing() to interpolate across missing data. Other fill methods are available, see ?replace_missing for details.

method: Specify the method for filling missing data. The default “linear” interpolation is usually sufficient.na.rm: ATRUE/FALSEvalue indicating when leading or trailingNAs remain after processing, should they be included or omitted.maxgap: Specify the maximum number of consecutiveNAs to fill. Any longer gaps will be left unchanged.

Subsequent processing & analysis steps may return errors when missing values are present. Therefore, it is a good habit to identify and deal with them early during data processing.

data_cleaned <- data_time_fixed |>

mutate(

across(any_of(nirs_columns), ## apply function across all of our `nirs_columns`

\(.x) replace_outliers(x = .x,

width = 20 * sample_rate, ## 20 sec window

na.rm = TRUE, ## ignore `NA`

return = "median")

),

across(any_of(nirs_columns),

\(.x) replace_invalid(x = .x,

values = c(0, 100), ## known invalid values

width = 20 * sample_rate,

return = "NA")

),

across(any_of(nirs_columns),

\(.x) replace_missing(x = .x,

method = "linear", ## linear interpolation

na.rm = FALSE, ## to preserve the length of the vector

maxgap = Inf) ## interpolate across gaps of any length

),

)

plot(data_cleaned)

That got rid of all the obvious data issues.

Downsample Data

Say we are interested in phenomena occurring on a time scale of 5-minutes, and we have NIRS data recorded at 50 Hz, but something like power data recorded at only 1 Hz. It may be easier to synchronise our data and faster to work with fewer samples if we down-sample the NIRS data also to 1 Hz.

downsample_data() options

data: This function works on a dataframe; it will take in a dataframe (data), apply the processing step to all data channels, and return the processed dataframe.sample_column: If the dataframe already has mNIRS.data metadata, the time or sample column will be detected automatically. Otherwise, we can define or overwrite it explicitly.sample_rate: If the dataframe already has mNIRS.data metadata, the sample_rate will be detected automatically. Otherwise, we can define or overwrite it explicitly.downsample_rate: Specify the output sample rate we want to covert to, as a number of samples per second (Hz). This value should be lower than the inputsample_rate, or else it won’t do anything.downsample_time: Alternatively, we can specify the output as a number of seconds per sample to produce the same result.

This example dataset is already at relatively low sample rate, but let’s just see what it looks like if for some reason we wanted to down-sample further to 0.1 Hz (1 sample every 10 sec).

data_downsampled <- data_cleaned |>

downsample_data(sample_column = NULL, ## will be automatically read from metadata

sample_rate = NULL, ## will be automatically read from metadata

downsample_time = 10) ## equal to `downsample_rate = 0.1`

#> ℹ Sample rate = 2 Hz. Output is downsampled at 0.1 Hz.

data_downsampled

#> # A tibble: 121 × 4

#> time lap smo2_left smo2_right

#> <dbl> <dbl> <dbl> <dbl>

#> 1 0 1 54.1 66.7

#> 2 10 1 55.1 64.5

#> 3 20 1 55.8 65.4

#> 4 30 1 55.7 65.1

#> 5 40 1 55.5 62.8

#> 6 50 1 55.7 64.4

#> 7 60 1 55.3 66.2

#> 8 70 1 56.1 66.7

#> 9 80 1 56.8 66.4

#> 10 90 1 56.6 68.5

#> # ℹ 111 more rows

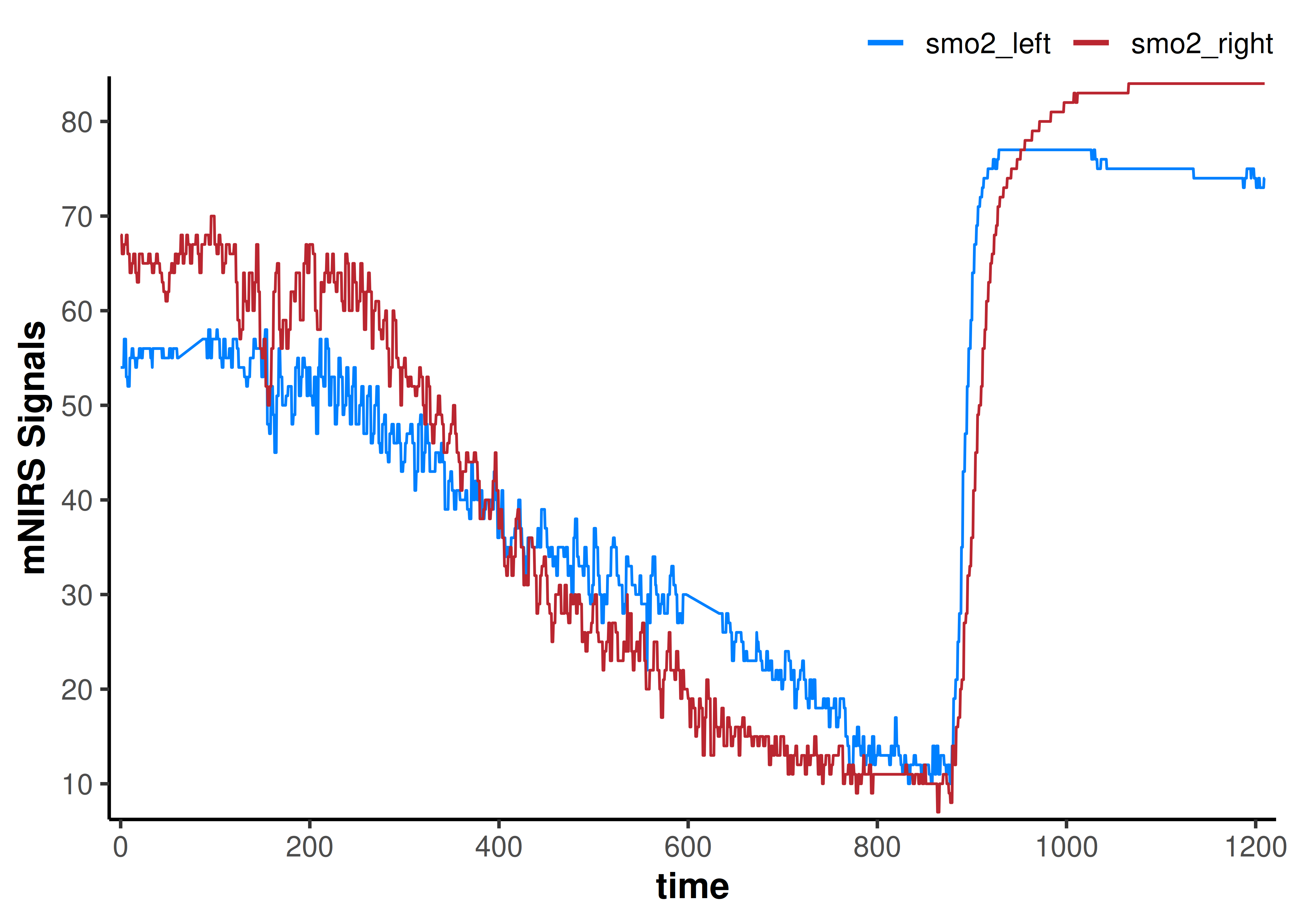

plot(data_downsampled)

The data channels certainly look smoother. This is kind of like we have taken a 10-second moving average of the data, but we have lost information by decreasing the number of samples. Our dataframe now has only 121 rows, compared to the original 2203 rows.

Digital Filtering

If we want more precise control to improve our signal-to-noise ratio in our dataset without losing information, we should apply digital filtering to smooth the data.

Choosing a digital filter

There are a few digital filtering methods available. Which option is best will depend in large part on the sample rate of the data and the frequency of the phenomena being observed.

Choosing filter parameters is an important processing step to improve signal-to-noise ratio and enhance our subsequent interpretations. Over-filtering the data can introduce data artefacts which can influence the signal analysis just as much as the original noisy signal.

It is perfectly valid to choose a digital filter by empirically testing iterative filter parameters until the signal or phenomena of interest is optimised for signal-to-noise ratio and minimal data artefacts.

The process of choosing a digital filter will be the topic of another vignette <currently under development>.

filter_data() methods

-

x: This function works on vector data; it takes in a single data channel (x), applies processing to that channel, and returns a vector of the processed channel data.

Smoothing-spline

-

method = "smooth-spline": A non-parametric smoothing spline is often quite good as a first pass filter when first examining the data. This can often be good enough for longer time-scale phenomena, such as a 5-minute exercise interval, or an intervention with a gradual response curve. For faster occurring or repeated (square-wave) responses, a smoothing-spline may not be appropriate.

Butterworth digital filter

-

method = "butterworth": A Butterworth low-pass digital filter is probably the most common method used in mNIRS research (whether appropriately, or not). For certain applications, such as identifying a signal with a known frequency, such as cycling/running cadence or heart rate, a pass-band or a different filter type may be better suited.

Moving average

-

method = "moving-average": The simplest smoothing method is a simple moving average applied over a specified number of samples. Commonly, this might be a 5- or 15-second centred moving average filter.

Apply the filter

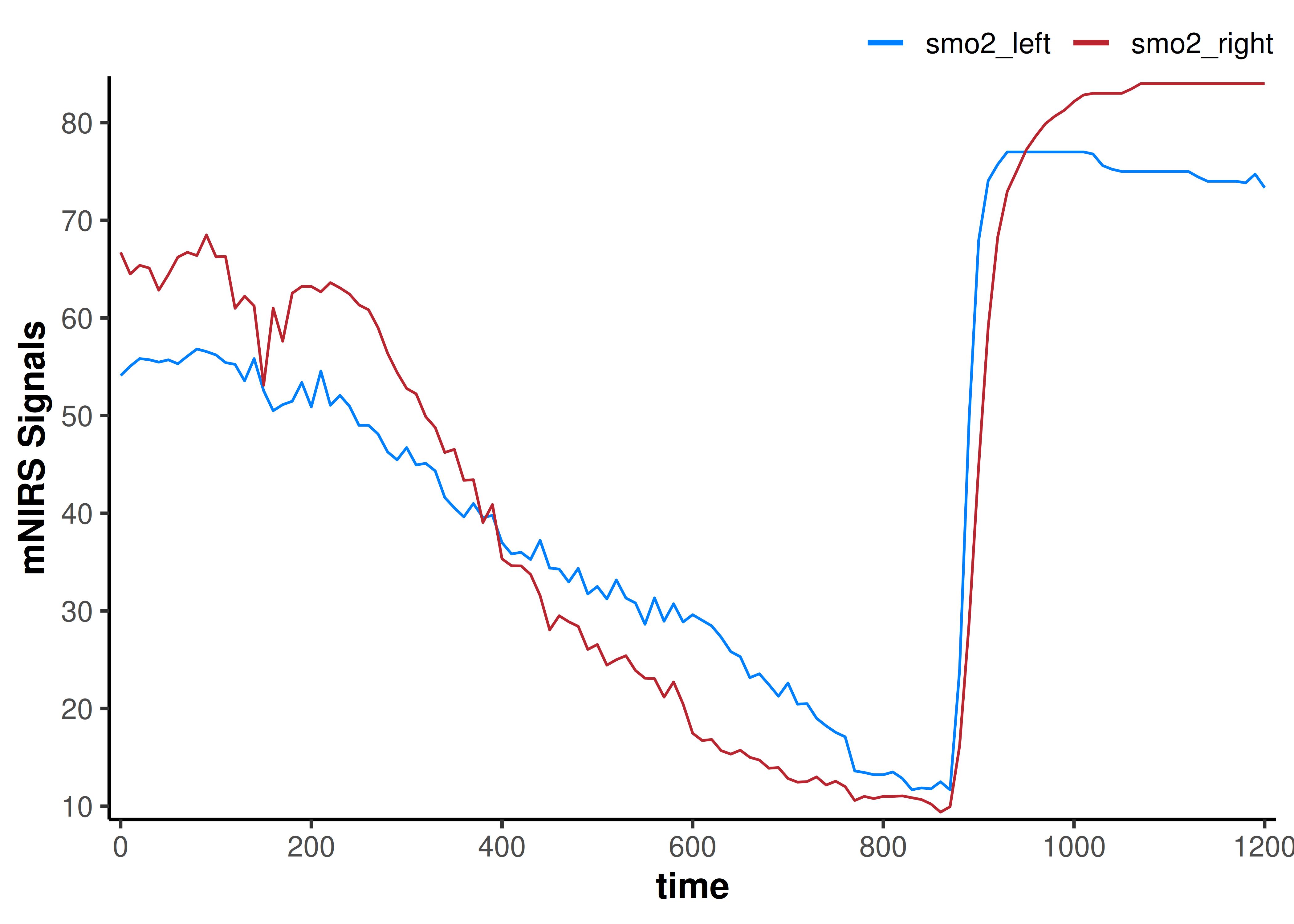

Let’s try a Butterworth low-pass filter, and we’ll specify some empirically chosen filter parameters. See ?filter_data for further details on each of these filtering methods and their respective parameters.

data_filtered <- data_cleaned |>

mutate(

across(any_of(nirs_columns),

\(.x) filter_data(x = .x,

method = "butterworth",

type = "low",

n = 2, ## see ?filter_data for details on filter parameters

W = 0.02)

)

)

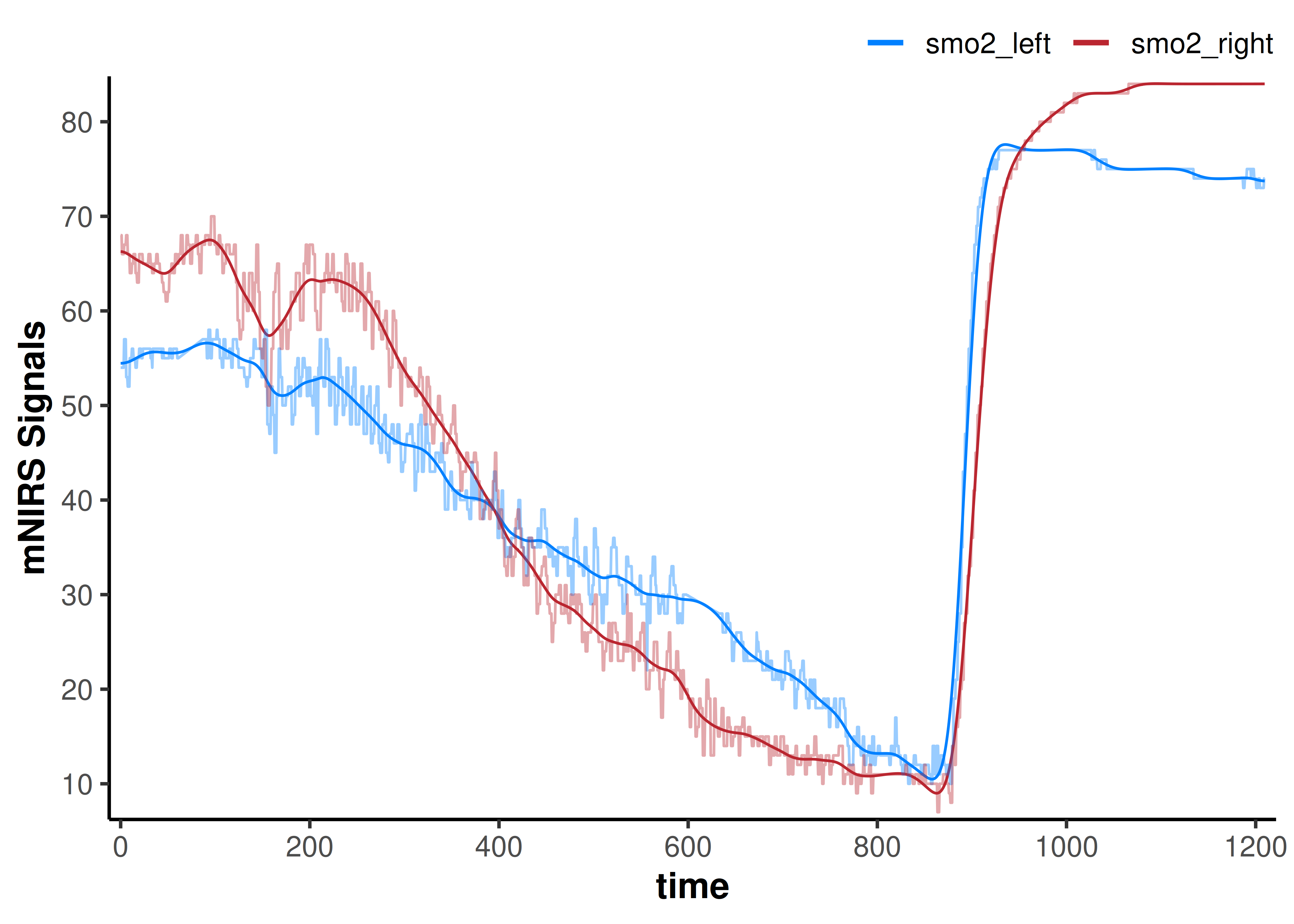

## we will add the non-filtered data back to the plot to compare

plot(data_filtered) +

geom_line(data = data_cleaned,

aes(y = smo2_left, colour = "smo2_left"), alpha = 0.4) +

geom_line(data = data_cleaned,

aes(y = smo2_right, colour = "smo2_right"), alpha = 0.4)

Shift and Rescale Data

We may need to adjust our data to normalise NIRS signal values across channels, or between individuals or trials, etc. For example, we may want to set our mean baseline value to zero for all NIRS signals. Or we may want to compare signal kinetics (the rate of change or time course of a response) after rescaling to the same relative dynamic range.

These functions allow us to either shift values and preserve the dynamic range (the delta amplitude from minimum to maximum values) of our NIRS channels, or rescale the data to a new dynamic range.

We can do this on multiple NIRS channels and either modify or preserve the relative scaling between those channels.

shift_data() options

We may want to shift our data values while preserving the absolute dynamic range. See ?shift_data for details.

data: This function works on a dataframe; it will take in a dataframe (data), apply the processing step to all data channels, and return the processed dataframe.nirs_columns: A list specifying how we want the NIRS data channels to be grouped, to preserve within-group relative scaling. Listing each channel separately (e.g.list("A", "B", "C")) will shift each channel independently. The relative scaling between channels will be lost.

Grouping channels (e.g.list(c("A", "B"), c("C"))) will shift each group of channels together. The relative scaling between channels will be preserved within each group, but lost across groups.

If the dataframe already has mNIRS.data metadata, the NIRS data channels will be detected automatically and processed as if grouped globally together.shift_to: The NIRS value to which the channels will be shifted.position: Specifies how we want to shift the data; either shifting the “minimum”, “maximum”, or “first” sample(s) to the value specified above.mean_samples: Specifies how many samples we want to average across when shifting to our new value specified above. The defaultmean_samples = 1will shift a single value.shift_by: An alternate way to specify, if we wanted to shift a data column by, say 10 units.

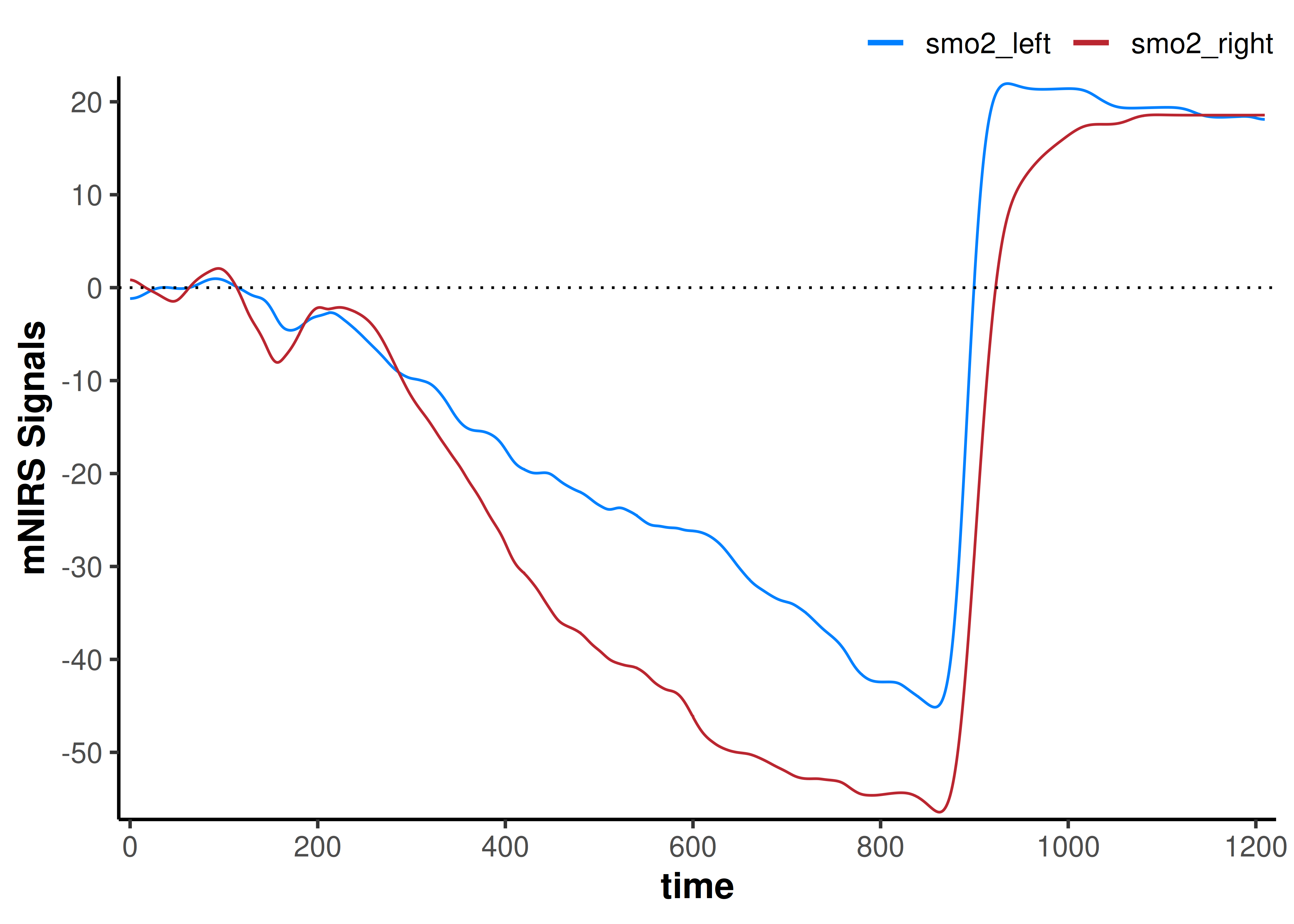

We have a 2-minute baseline for this dataset, so maybe we want to shift both NIRS signals so that the mean value of the 2-min baseline is equal to zero.

data_shifted <- data_filtered |>

## convert `nirs_columns` to separate list items to shift each column separately

shift_data(nirs_columns = as.list(nirs_columns),

shift_to = 0,

position = "first",

mean_samples = 120 * sample_rate) ## shift the mean first 120 sec equal to zero

plot(data_shifted) +

geom_hline(yintercept = 0, linetype = "dotted")

Now our interpretation may change; if we are assuming the baseline represents the same starting condition, our smo2_left signal does not deoxygenate as far as our smo2_right signal.

rescale_data() options

We may want to rescale our data to a new dynamic range. See ?rescale_data for details.

data: This function works on a dataframe; it will take in a dataframe (data), apply the processing step to all data channels, and return the processed dataframe.nirs_columns: A list specifying how we want the NIRS data channels to be grouped, to preserve within-group relative scaling (see Shift Data above).rescale_range: Specifies the new dynamic range, in the formc(minimum, maximum).

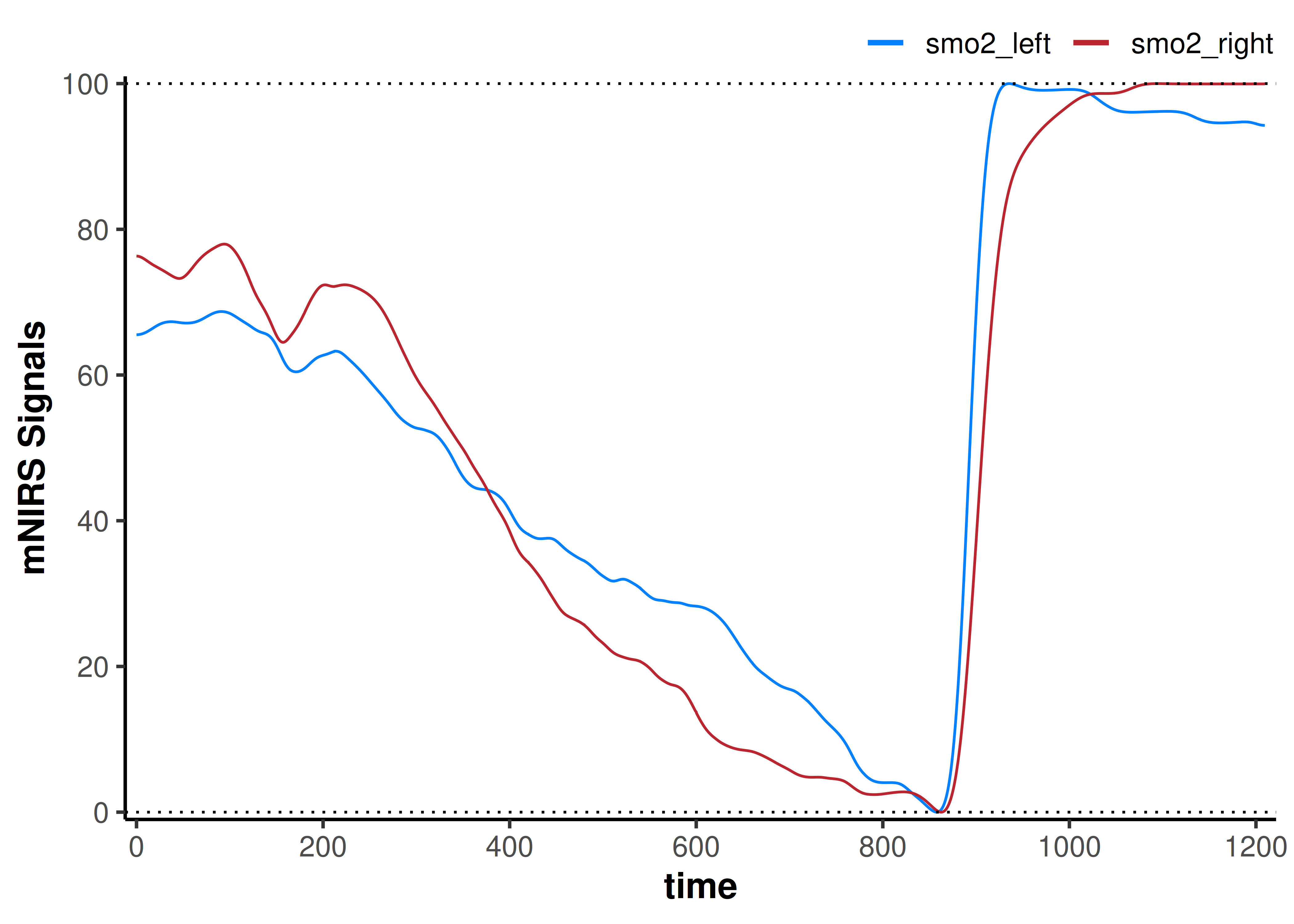

For example, if we are interested in comparing the ‘functional range’ of each NIRS signal, we may want to set them both to 0-100%.

data_rescaled <- data_filtered |>

## convert `nirs_columns` vector to separate list items to shift each column separately

rescale_data(nirs_columns = as.list(nirs_columns),

rescale_range = c(0, 100)) ## rescale to a 0-100% functional exercise range

plot(data_rescaled) +

geom_hline(yintercept = c(0, 100), linetype = "dotted")

Here, our interpretation may be that smo2_left appears to deoxygenate slower to it’s fullest extent during incremental exercise, and reoxygenate faster compared to smo2_right.

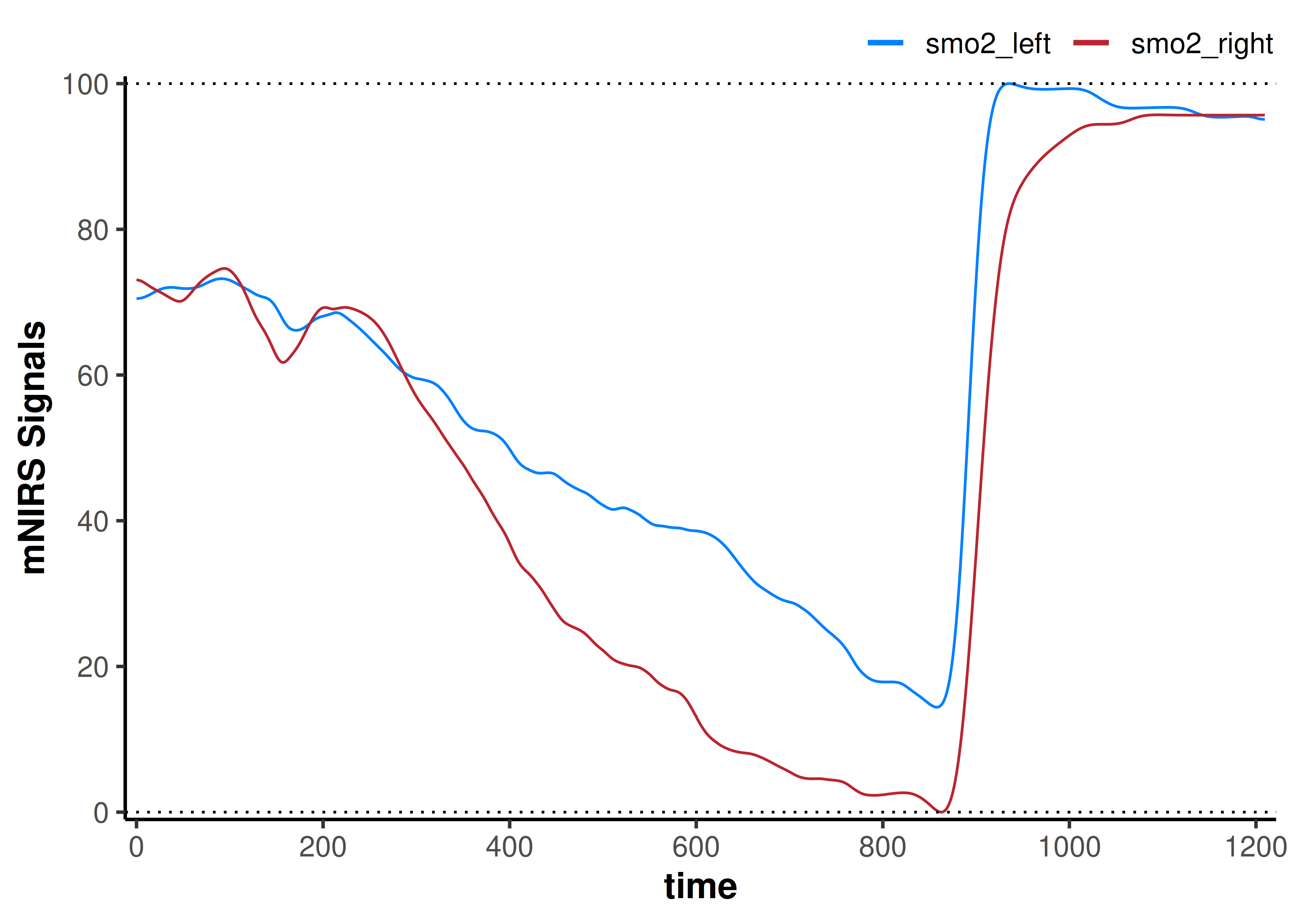

Combined shift and rescale

What if we wanted to shift both NIRS signals so their mean 120-sec baselines are equal, then rescale the dynamic range of both signals grouped, such that the highest and lowest values were from 0 to 100?

data_rescaled <- data_filtered |>

## the lowest single value for each NIRS channel will be shifted to zero

shift_data(nirs_columns = as.list(nirs_columns),

shift_to = 0,

position = "first",

mean_samples = 120 * sample_rate) |>

## Then both channels will be grouped and the maximum value of the two scaled to 100%

rescale_data(nirs_columns = list(nirs_columns),

rescale_range = c(0, 100))

plot(data_rescaled) +

geom_hline(yintercept = c(0, 100), linetype = "dotted")

Now our interpretation might be that assuming the same starting baseline condition in both tissues, smo2_left preserves greater relative oxygenation during exercise and immediate recovery compared to smo2_right.